Chih-Yao Ma, Zuxuan Wu, Ghassan AlRegib, Caiming Xiong, Zsolt Kira

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019 (Oral)

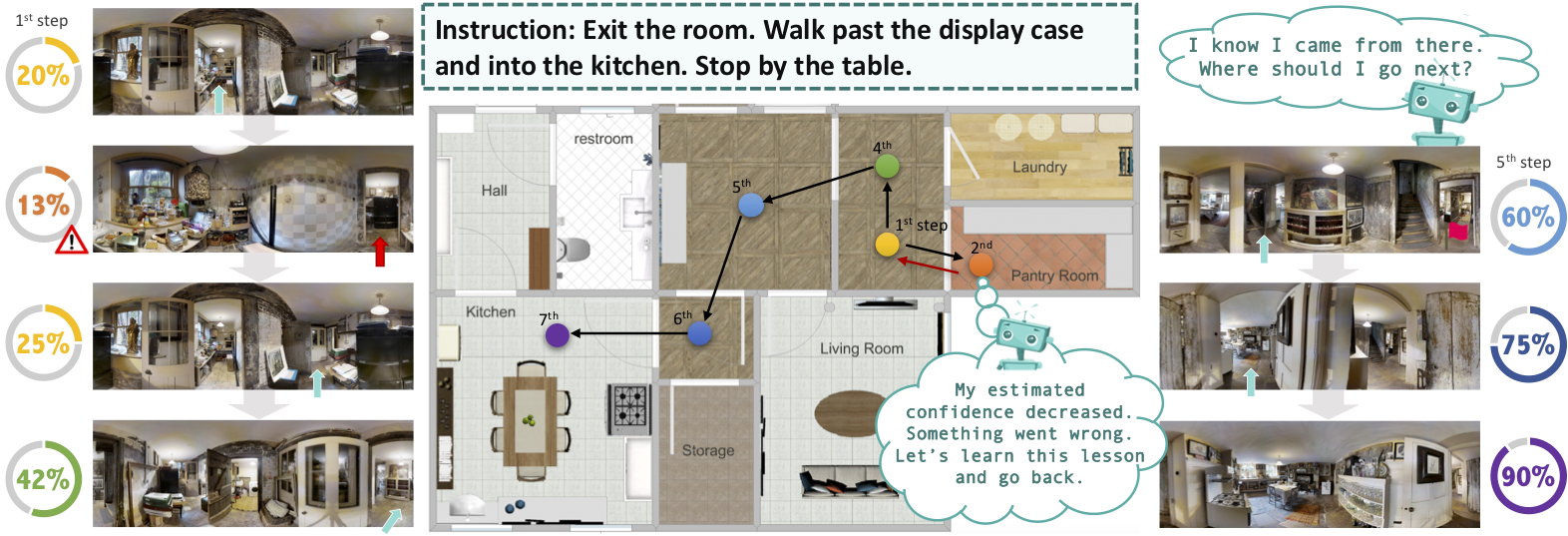

As deep learning continues to make progress on challenging perception tasks, there is increased interest in combining vision, language, and decision-making. Specifically, the Vision and Language Navigation (VLN) task involves navigating to a goal purely from language instructions and visual information, without explicit knowledge of the goal. Recent successful approaches have made inroads in achieving good success rates for this task but rely on beam search, which thoroughly explores a large number of trajectories and is unrealistic for applications such as robotics. In this paper, inspired by the intuition of viewing the problem as search on a navigation graph, we propose to use a progress monitor developed in prior work as a learnable heuristic for search. We then propose two modules incorporated into an end-to-end architecture: 1) a learned mechanism to perform backtracking, which decides whether to continue moving forward or roll back to a previous state (Regret Module), and 2) a mechanism to help the agent decide which direction to go next by showing directions that are visited and their associated progress estimate (Progress Marker). Combined, the proposed approach significantly outperforms current state-of-the-art methods using greedy action selection, with a 5% absolute improvement in success rate on the test server and, more importantly, an 8% absolute improvement in success rate normalized by path length.

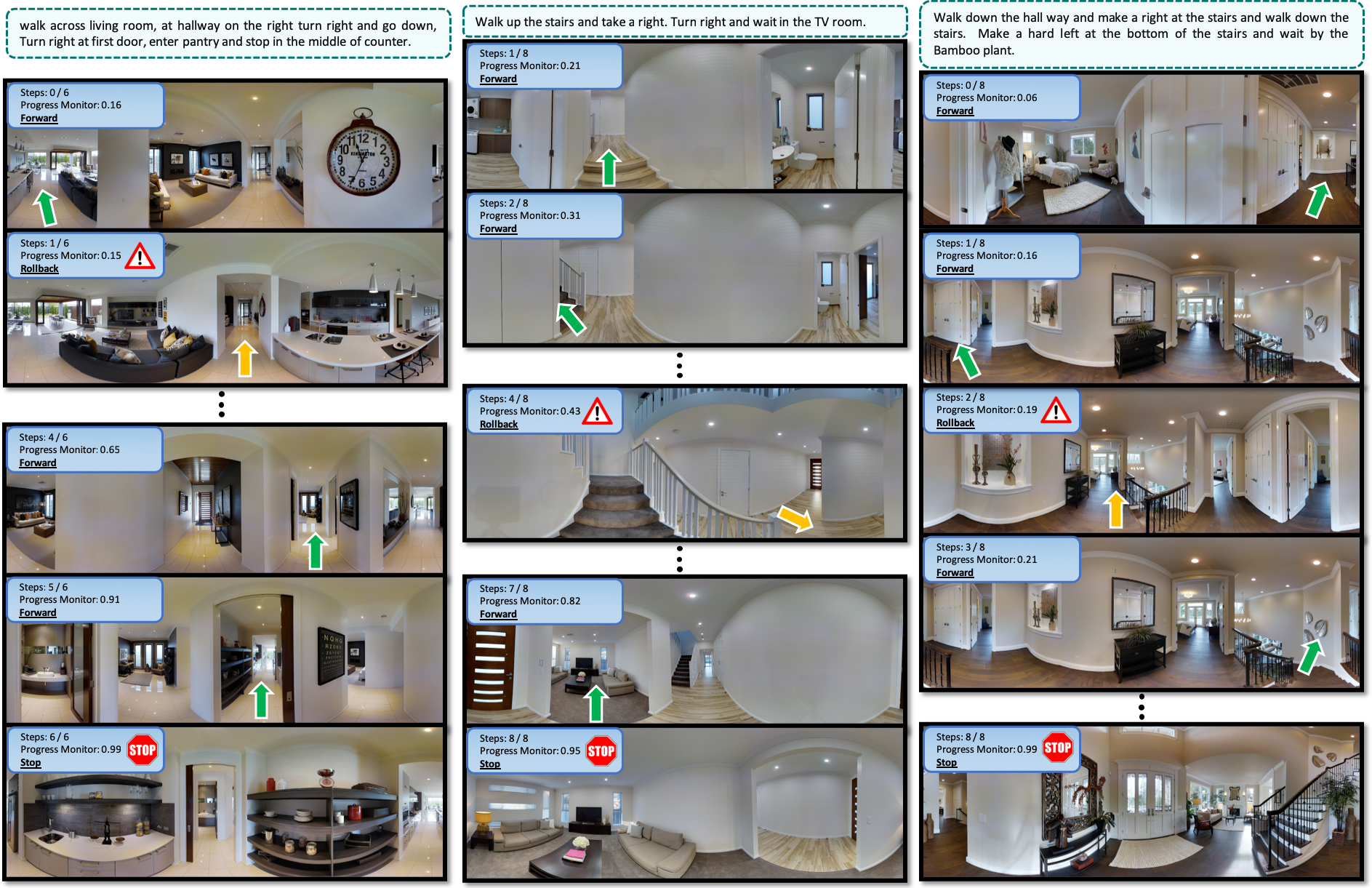
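The backtracking idea described above can be illustrated with a toy sketch. This is not the paper's model: the Regret Module and Progress Marker are learned components, whereas here the rollback decision is a hand-written rule ("roll back if estimated progress dropped"), and `graph`, `policy_score`, `progress`, and `navigate` are hypothetical names invented for this example.

```python
def navigate(graph, policy_score, progress, start, goal_threshold=0.95, max_steps=20):
    """Toy greedy navigation with rollback on a navigation graph.

    graph:        dict node -> list of neighbor nodes (hypothetical toy graph)
    policy_score: dict (node, neighbor) -> preference of a stand-in policy
    progress:     dict node -> estimated progress in [0, 1], a stand-in for
                  the output of a learned progress monitor
    """
    path = [start]    # every node visited, including rollback moves
    stack = [start]   # nodes on the current forward path
    tried = {}        # node -> directions already tried from that node

    for _ in range(max_steps):
        current = stack[-1]
        if progress[current] >= goal_threshold:
            break  # the agent believes it has reached the goal

        seen = tried.setdefault(current, set())
        options = [n for n in graph[current] if n not in seen and n not in stack]
        if not options:
            if len(stack) == 1:
                break  # nothing left to explore
            stack.pop()              # dead end: step back along the forward path
            path.append(stack[-1])
            continue

        # Greedy action selection among directions not yet tried.
        nxt = max(options, key=lambda n: policy_score.get((current, n), 0.0))
        seen.add(nxt)                # remember that this direction was visited
        path.append(nxt)

        if progress[nxt] < progress[current]:
            path.append(current)     # hand-written "regret" rule: roll back
        else:
            stack.append(nxt)        # progress did not drop: keep moving forward

    return path


# Hypothetical example: the stand-in policy prefers B, but progress drops there.
graph = {"A": ["B", "C"], "B": ["A"], "C": ["A", "D"], "D": ["C"]}
policy_score = {("A", "B"): 0.6, ("A", "C"): 0.4, ("C", "D"): 0.9}
progress = {"A": 0.10, "B": 0.05, "C": 0.50, "D": 1.00}

trajectory = navigate(graph, policy_score, progress, start="A")
print(trajectory)
```

In this toy run the agent first steps to `B`, sees its progress estimate fall, returns to `A`, and then reaches `D` through `C`. The fixed rule would not handle a case where progress rises only slightly after a wrong move, which is the kind of situation a learned mechanism is meant to address.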

The figure below shows qualitative outputs of our model during successful navigation in unseen environments. In the example on the left, the agent makes a mistake at the first step, and the estimated progress at the second step decreases slightly. The agent then decides to roll back, after which the progress estimate increases significantly. Finally, the agent stops correctly as instructed. In the middle, we show an example where the agent correctly goes up the stairs but then incorrectly goes up again rather than turning and finding the TV as instructed. Note that the progress estimate increases, but only by a small amount; this demonstrates the need for learned mechanisms that can reason about the textual and visual grounding and context, as well as the resulting level of change in progress. In this case, the agent then correctly decides to roll back and successfully walks into the TV room. On the right, the agent misses the stairs, resulting in a very small progress increase, and decides to roll back as a result. Upon reaching the goal, the agent’s progress estimate is 99%. Please refer to the Appendix for the full trajectories and unsuccessful examples.

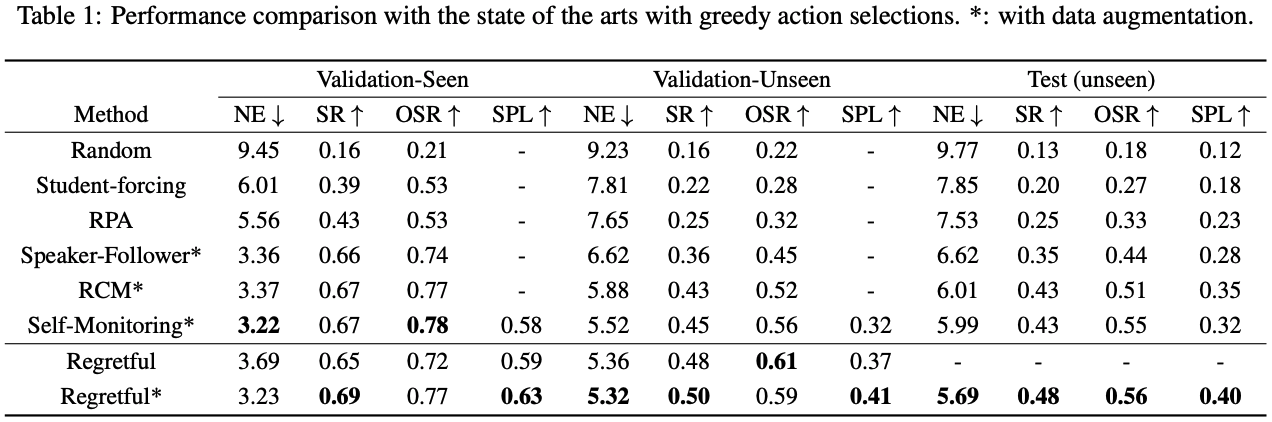

We evaluate our proposed Regretful Agent on the Room-to-Room dataset for the Vision-and-Language Navigation task. Our method achieves a significant performance improvement over existing approaches: 37% SPL and 48% SR on the validation unseen set, outperforming all existing work. Our best-performing model achieves 41% SPL and 50% SR on the validation unseen set when trained with synthetic data. On the test server, we demonstrate an absolute improvement of 8% in SPL and 5% in SR over the current state-of-the-art method.
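For readers unfamiliar with the two metrics, the sketch below shows how SR and SPL are typically computed, assuming SPL follows the commonly used "success weighted by path length" definition (each successful episode contributes the ratio of the shortest-path length to the larger of the actual and shortest path lengths). The `Episode` class and the numbers are hypothetical and are not taken from the paper's evaluation.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    success: bool            # did the agent stop close enough to the goal?
    shortest_length: float   # length of the shortest path from start to goal
    path_length: float       # length of the path the agent actually took


def success_rate(episodes):
    """Fraction of episodes that ended in success (SR)."""
    return sum(1 for e in episodes if e.success) / len(episodes)


def spl(episodes):
    """Success weighted by (normalized inverse) path length (SPL)."""
    total = 0.0
    for e in episodes:
        if not e.success:
            continue  # failed episodes contribute zero
        denom = max(e.path_length, e.shortest_length)
        # Guard against a degenerate zero-length episode.
        total += 1.0 if denom == 0 else e.shortest_length / denom
    return total / len(episodes)


# Hypothetical episodes, for illustration only.
episodes = [
    Episode(success=True, shortest_length=10.0, path_length=10.0),   # optimal path
    Episode(success=True, shortest_length=10.0, path_length=20.0),   # twice as long
    Episode(success=False, shortest_length=10.0, path_length=5.0),   # failure
]

sr_value = success_rate(episodes)
spl_value = spl(episodes)
print(f"SR = {sr_value:.3f}, SPL = {spl_value:.3f}")
```

Because SPL penalizes long trajectories, an agent that succeeds only after exhaustive exploration (for example, with beam search) scores lower on SPL than one that reaches the goal along a near-shortest path with the same SR.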

If you find this work useful, please cite our paper:

    @inproceedings{ma2019theregretful,
      title={The Regretful Agent: Heuristic-Aided Navigation through Progress Estimation},
      author={Ma, Chih-Yao and Wu, Zuxuan and AlRegib, Ghassan and Xiong, Caiming and Kira, Zsolt},
      booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year={2019},
      url={https://arxiv.org/abs/1903.01602},
    }